Neural Autogrind

Goal

Allow the player to effectively play the digital game Maplestory without using their limbs.

Research

Reference:

Tetris game (Team NeuroTetris - WI 2020)

Used NeuroSky hardware alongside the game

Dino Jump Game (Team Dino Jump - F 2020)

Literature Examples

Hafeez T, Umar Saeed SM, Arsalan A, Anwar SM, Ashraf MU, Alsubhi K (2021) EEG in game user analysis: A framework for expertise classification during gameplay. PLoS ONE 16(6): e0246913. https://doi.org/10.1371/journal.pone.0246913

Measured EEG data during Temple Run gameplay

Mallapragada, Chandana, "Classification of EEG signals of user states in gaming using machine learning" (2018). Masters Theses. 7831. https://scholarsmine.mst.edu/masters_theses/7831

Classifies three common user states during gameplay using machine learning

How it works

The player would create a training route using SSVEP (steady-state visually evoked potential) signals

The player could decide when to edit their route by triple blinking

The player would then start their training session by double blinking

The training session could be stopped by double blinking again
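The blink commands above can be read as a small state machine. The Python sketch below is one hypothetical set of transition rules. The `Mode` names, the `next_mode` function, and the choice to ignore a triple blink while a training session is running are our assumptions. The sketch also assumes blink counts are already available, since blink detection is still listed as a future improvement.

```python
from enum import Enum


class Mode(Enum):
    IDLE = "idle"          # waiting for a command
    EDITING = "editing"    # building the training route with SSVEP selections
    TRAINING = "training"  # replaying the route in the game


def next_mode(mode: Mode, blinks: int) -> Mode:
    """Return the next control mode for a detected blink count.

    Hypothetical rules: a triple blink enters route editing unless a
    training session is running. A double blink starts a session, or
    stops it if one is already running. Other counts are ignored.
    """
    if blinks == 3 and mode is not Mode.TRAINING:
        return Mode.EDITING
    if blinks == 2:
        return Mode.IDLE if mode is Mode.TRAINING else Mode.TRAINING
    return mode


if __name__ == "__main__":
    mode = Mode.IDLE
    for blinks in (3, 2, 2):
        mode = next_mode(mode, blinks)
        print(blinks, "->", mode.value)
```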

Hardware & Software

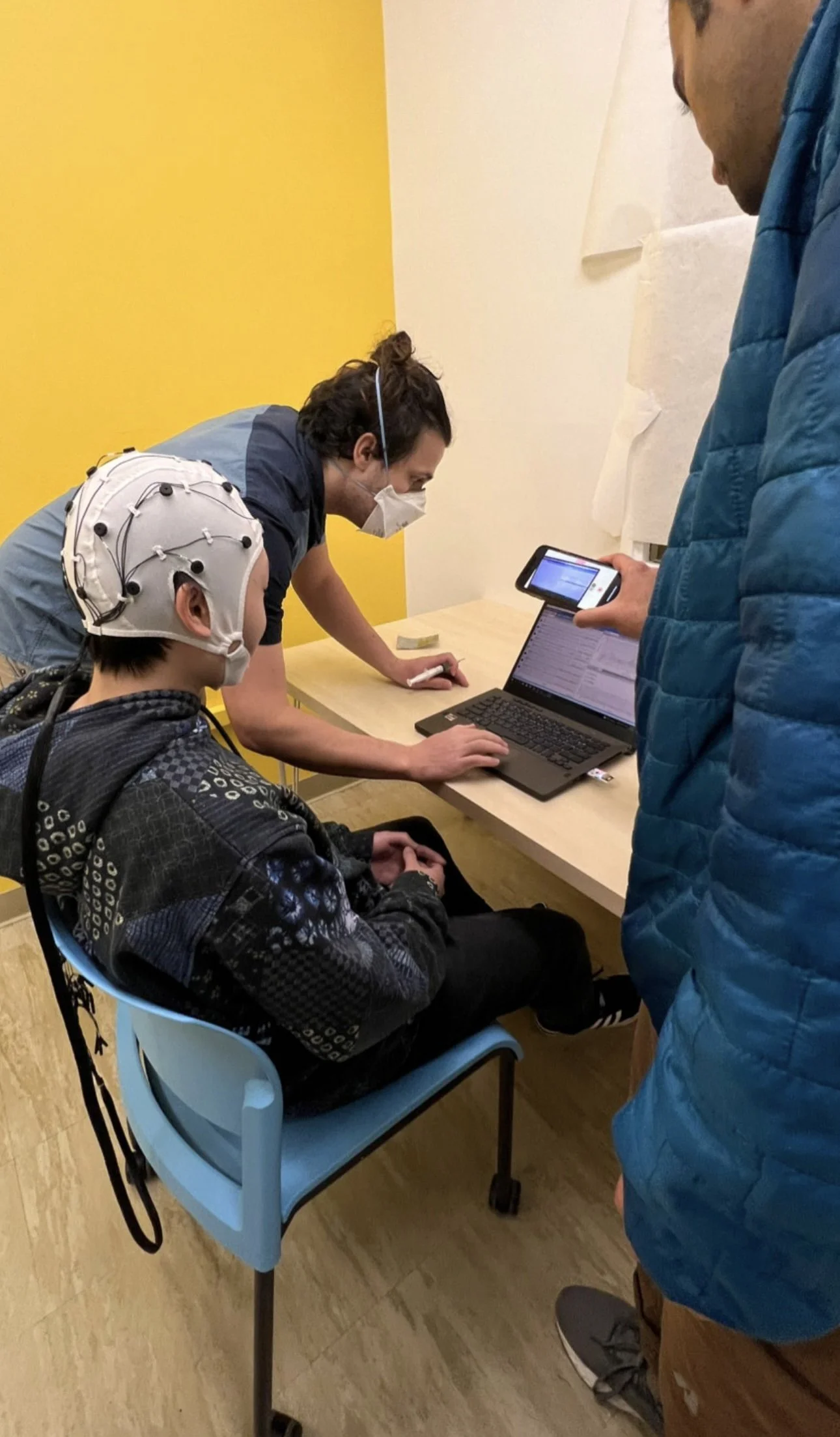

Hardware

Cyton Board (8 Channels)

EEG Electrode Cap (with Signagel)

Windows Laptop (Windows 10)

For collecting data and running scripts

Software

Stimulus: Pygame

Sending EEG signal and markers: Pylsl, OpenBCI GUI

EEG data processing: Scipy.signal

Game automation: PyDirectInput
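As a rough illustration of the automation step, the sketch below turns a sequence of decoded SSVEP frequencies into a key route and then replays it. The frequency-to-key table, `build_route`, and `replay` are all hypothetical. The key-press function is passed in as an argument so the sketch runs on any machine. On Windows, that argument could be `pydirectinput.press`.

```python
from typing import Callable, Dict, List

# Hypothetical mapping from detected stimulus frequency (Hz) to a game key.
FREQ_TO_KEY: Dict[int, str] = {8: "left", 10: "right", 12: "up", 14: "down"}


def build_route(detected_freqs: List[int]) -> List[str]:
    """Translate SSVEP classifications into key names, skipping any
    frequency that has no assigned key."""
    return [FREQ_TO_KEY[f] for f in detected_freqs if f in FREQ_TO_KEY]


def replay(route: List[str], press: Callable[[str], None], loops: int = 1) -> int:
    """Press each key in the route, repeating the whole route `loops` times.

    Returns the number of key presses sent.
    """
    sent = 0
    for _ in range(loops):
        for key in route:
            press(key)
            sent += 1
    return sent


if __name__ == "__main__":
    route = build_route([8, 8, 12, 10])
    # On Windows, pass pydirectinput.press instead of print to drive the game.
    replay(route, press=print, loops=2)
```

Passing in the press function keeps the route logic testable without a game window. Which Maplestory action each key triggers depends on the player's key bindings.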

Methods

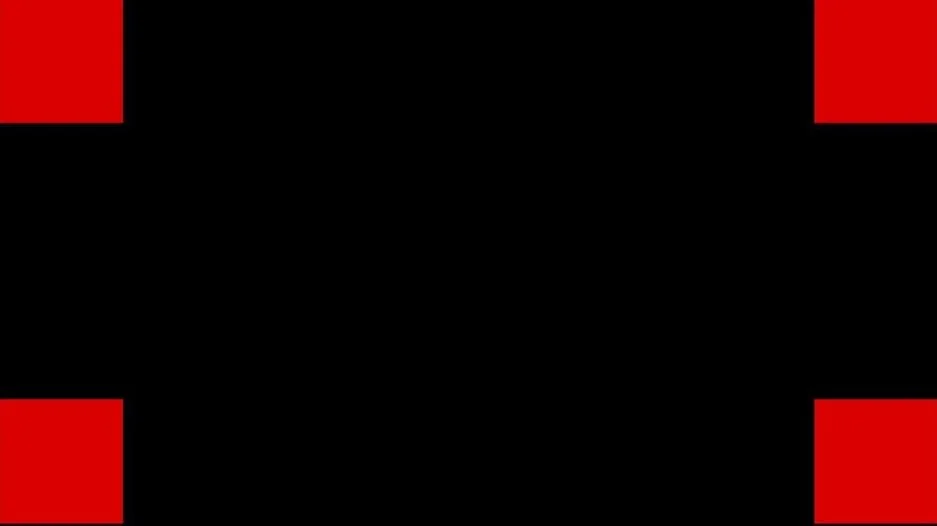

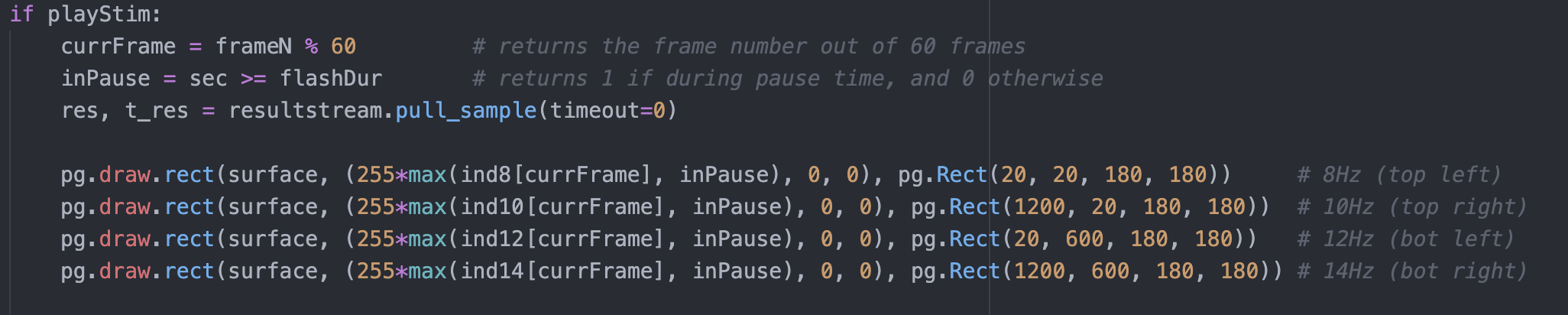

The visual stimuli were created using the approximation method developed by Dr. Nakanishi

On the computer screen, each red box flashes at a different rate

Creating Visual Stimuli

Using the Pygame module, we change the color of each rectangle on every frame according to its approximation indices and whether its flash period has passed.
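As we understand Nakanishi et al.'s approximation, frame i at refresh rate R is lit when a 50% duty-cycle square wave, square(2πf·i/R + φ), is in its "on" half. This lets a monitor approximate flicker frequencies that are not integer divisors of its refresh rate. The sketch below computes that per-frame on/off pattern. The 60 Hz refresh rate and the `flicker_frames` name are assumptions. In Pygame, the returned value would select the box color for that frame.

```python
import math
from typing import List


def flicker_frames(freq_hz: float, refresh_hz: float = 60.0,
                   n_frames: int = 60, phase_rad: float = 0.0) -> List[int]:
    """Return 1 (box on) or 0 (box off) for each of n_frames frames.

    Samples a 50%-duty square wave of frequency freq_hz at the monitor
    refresh rate: frame i is "on" during the first half of each cycle.
    """
    frames = []
    for i in range(n_frames):
        # Position within the current flicker cycle, in [0, 1).
        cycle_pos = (freq_hz * i / refresh_hz + phase_rad / (2 * math.pi)) % 1.0
        frames.append(1 if cycle_pos < 0.5 else 0)
    return frames


if __name__ == "__main__":
    for f in (8, 10, 12, 14):
        print(f, "Hz:", "".join(str(v) for v in flicker_frames(f, n_frames=30)))
```

At 60 Hz, the 10 Hz and 12 Hz patterns repeat every 6 and 5 frames respectively. The 8 Hz pattern (7.5 frames per cycle) and the 14 Hz pattern (about 4.3 frames) rely on the approximation, so their on and off runs vary slightly between cycles. A dropped frame shifts the whole pattern.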

Using LSL Streams

Two main components

Visual Stimuli & automation script

Backend

The stimuli & automation script

Sends out markers to the backend indicating when to store data and when to process and send results

Receives SSVEP results from backend

Backend

Receives EEG data from OpenBCI GUI via LSL

Receives markers from the stimuli & automation script

Sends out results to the stimuli & automation script after processing the EEG data
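The backend's marker handling can be sketched as a small class. The marker strings `"start"` and `"process"`, the `MarkerBackend` class, and its `analyse` callback are all hypothetical. In the real pipeline, three connections would feed and drain this class:

- `on_sample` would receive samples from a pylsl `StreamInlet` on the OpenBCI GUI stream.
- `on_marker` would receive markers from an inlet on the stimulus script's marker stream.
- The returned result would be sent back through a `StreamOutlet`.

Those I/O loops are omitted so the sketch runs without hardware.

```python
from typing import Callable, List, Optional, Sequence


class MarkerBackend:
    """Buffer EEG samples between markers and run analysis on request.

    Hypothetical protocol: "start" begins storing samples; "process"
    stops storing, analyses the buffer, and returns the result.
    """

    def __init__(self, analyse: Callable[[List[Sequence[float]]], object]):
        self.analyse = analyse
        self.recording = False
        self.buffer: List[Sequence[float]] = []

    def on_sample(self, sample: Sequence[float]) -> None:
        # Samples arriving outside a start/process window are discarded.
        if self.recording:
            self.buffer.append(sample)

    def on_marker(self, marker: str) -> Optional[object]:
        if marker == "start":
            self.recording = True
            self.buffer = []
        elif marker == "process" and self.recording:
            self.recording = False
            return self.analyse(self.buffer)
        return None


if __name__ == "__main__":
    backend = MarkerBackend(analyse=len)  # stand-in analysis: count samples
    backend.on_marker("start")
    for s in ([0.1], [0.2], [0.3]):
        backend.on_sample(s)
    print(backend.on_marker("process"))
```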

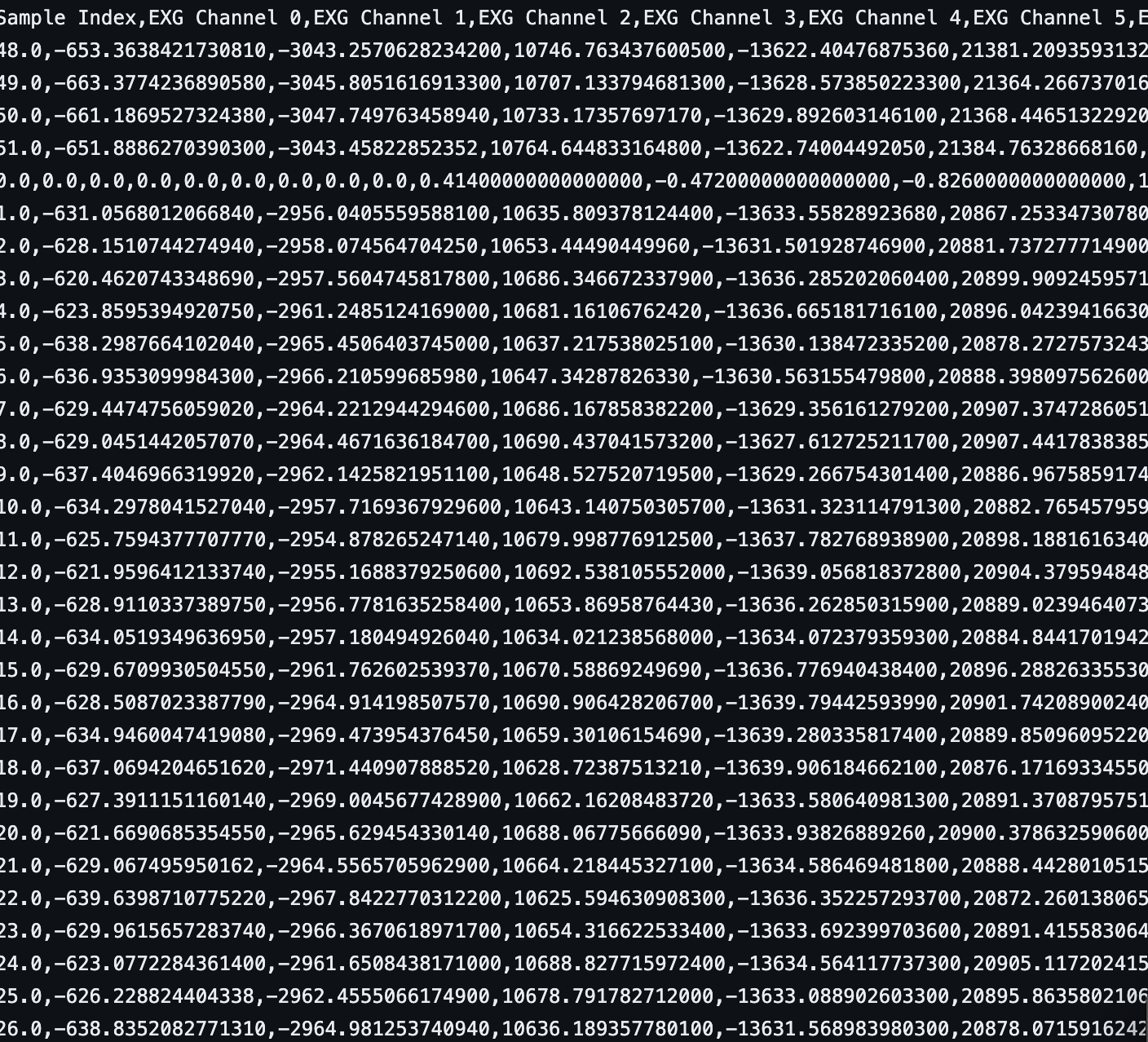

Procedure & Data Collection

While wearing the EEG electrode cap, the user focused on one of four squares, each flashing at a different frequency (8, 10, 12, or 14 Hz).

We recorded the SSVEP signal on channel 01 and blinks on channel 04.

The user focused on each flashing square for 30 seconds.

Each frequency's recording was saved to a separate CSV file.
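A minimal loader for one of these CSV recordings might look like the following. The `%` comment prefix, the column index for channel 01, and the `load_channel` helper are assumptions that should be checked against the actual export. At the 250 Hz sampling rate used for filtering, each 30 s recording should hold about 30 × 250 = 7500 samples.

```python
import io

import numpy as np

FS = 250          # sampling rate (Hz) used in the Filtering section
DURATION_S = 30   # seconds of focus per stimulus frequency


def load_channel(source, column: int, skip_header: int = 0) -> np.ndarray:
    """Load one channel column from a comma-separated recording.

    Lines starting with '%' are treated as comments. The column index and
    header layout are assumptions; check them against the actual file.
    """
    data = np.genfromtxt(source, delimiter=",", comments="%",
                         skip_header=skip_header)
    return np.atleast_2d(data)[:, column]


expected_samples = FS * DURATION_S  # samples expected per 30 s recording

if __name__ == "__main__":
    demo = io.StringIO("% exported header\n0,1.5,2.5\n1,3.5,4.5\n")
    print(load_channel(demo, column=1), expected_samples)
```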

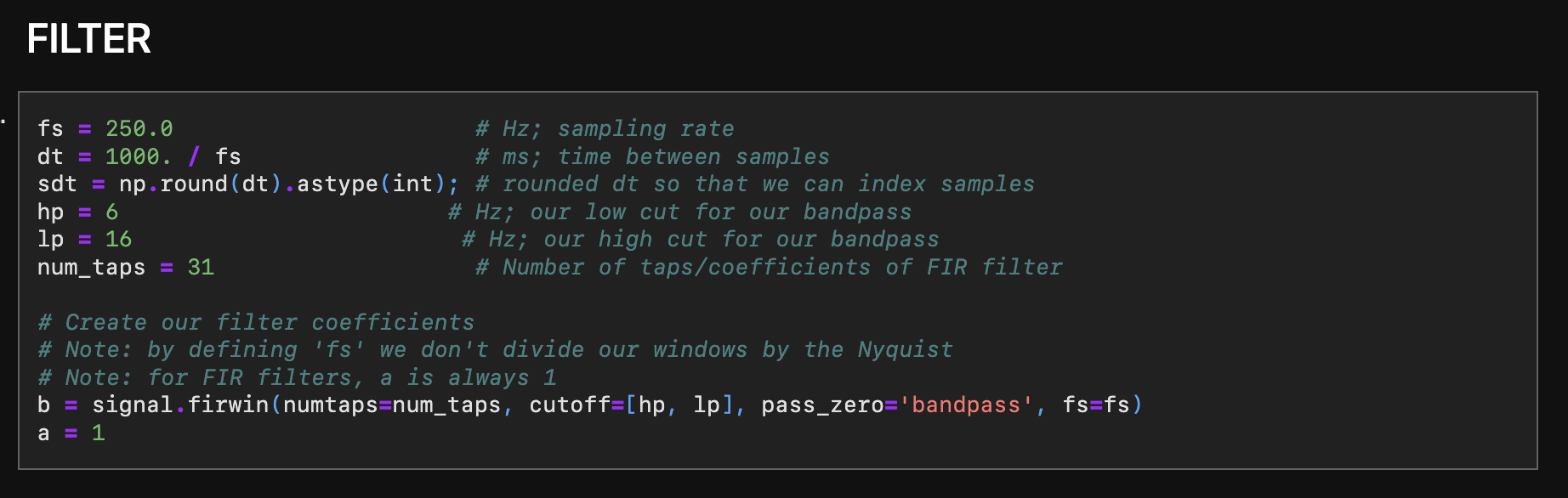

Filtering

When we first looked at the estimated power spectral density (PSD) of our data, we saw noise and other anomalies that needed to be filtered out. We used a non-causal, zero-phase filter adapted from assignment three, with three changes:

- sampling rate set to 250 Hz
- high-pass cutoff set to 6 Hz
- low-pass cutoff set to 16 Hz

We chose this band because the signals of interest lie between 8 Hz and 14 Hz.
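A zero-phase band-pass filter with the same 6 to 16 Hz band at 250 Hz can be sketched with scipy.signal. The Butterworth design, the order of 4, and the `bandpass_zero_phase` name are assumptions, not necessarily what assignment three used. `sosfiltfilt` runs the filter forward and then backward. This cancels the phase shift and makes the filter non-causal.

```python
import numpy as np
from scipy import signal

FS = 250.0  # sampling rate used in the write-up (Hz)


def bandpass_zero_phase(x, low_hz=6.0, high_hz=16.0, fs=FS, order=4):
    """Zero-phase (non-causal) Butterworth band-pass filter.

    Uses second-order sections for numerical stability and applies them
    forward and backward with sosfiltfilt, so the output has no phase shift.
    """
    sos = signal.butter(order, [low_hz, high_hz], btype="bandpass",
                        fs=fs, output="sos")
    return signal.sosfiltfilt(sos, np.asarray(x, dtype=float))


if __name__ == "__main__":
    t = np.arange(7500) / FS                       # 30 s of samples
    raw = (np.sin(2 * np.pi * 10 * t)              # SSVEP-like 10 Hz component
           + 2.0 * np.sin(2 * np.pi * 0.2 * t)     # slow drift
           + 0.5 * np.sin(2 * np.pi * 60 * t))     # mains-like interference
    clean = bandpass_zero_phase(raw)
    print(raw.std(), clean.std())
```

The backward pass needs future samples, so this form suits recorded data. A real-time path would apply it to short buffered windows or switch to a causal filter.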

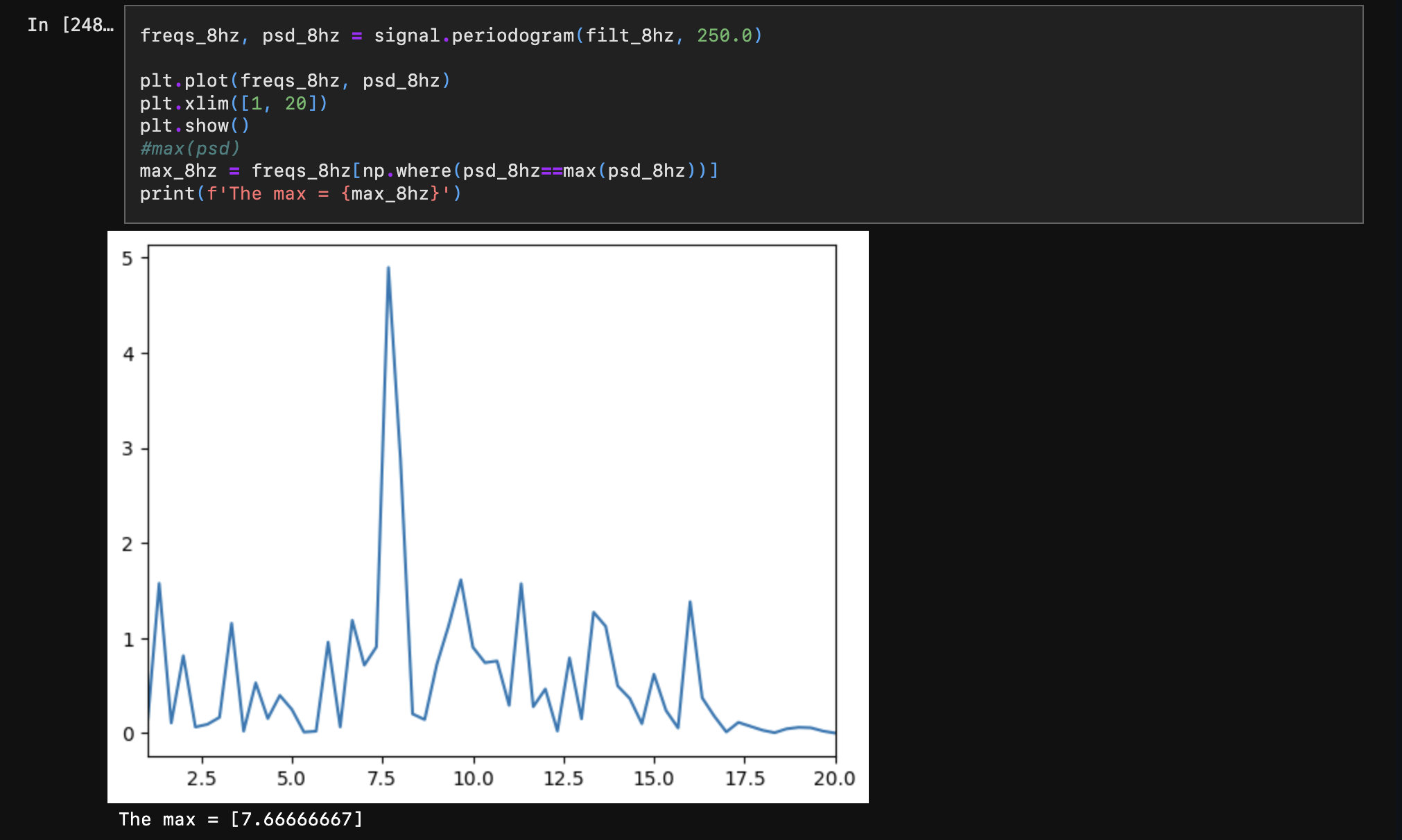

Pre-filtered 8 Hz recording, channel 01: estimated power spectral density

After filtering, the maximum peak of the power spectral density is much closer to what we expect. We found it at 7.66 Hz, which is within a reasonable range for the 8 Hz recording.
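One way to locate the maximum peak is Welch's method, via `scipy.signal.welch`. The write-up does not say which PSD estimator was used. Welch's method, the `nperseg=1000` setting (0.25 Hz resolution at 250 Hz), and the `psd_peak` helper are therefore assumptions.

```python
import numpy as np
from scipy import signal

FS = 250.0  # sampling rate (Hz)


def psd_peak(x, fs=FS, nperseg=1000):
    """Estimate the PSD with Welch's method and return (freqs, psd, peak_hz)."""
    freqs, psd = signal.welch(x, fs=fs, nperseg=nperseg)
    return freqs, psd, freqs[np.argmax(psd)]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    t = np.arange(7500) / FS
    x = np.sin(2 * np.pi * 8 * t) + 0.5 * rng.standard_normal(t.size)
    _, _, peak = psd_peak(x)
    print(f"peak at {peak:.2f} Hz")
```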

Estimated power spectral density of the 8 Hz recording after filtering

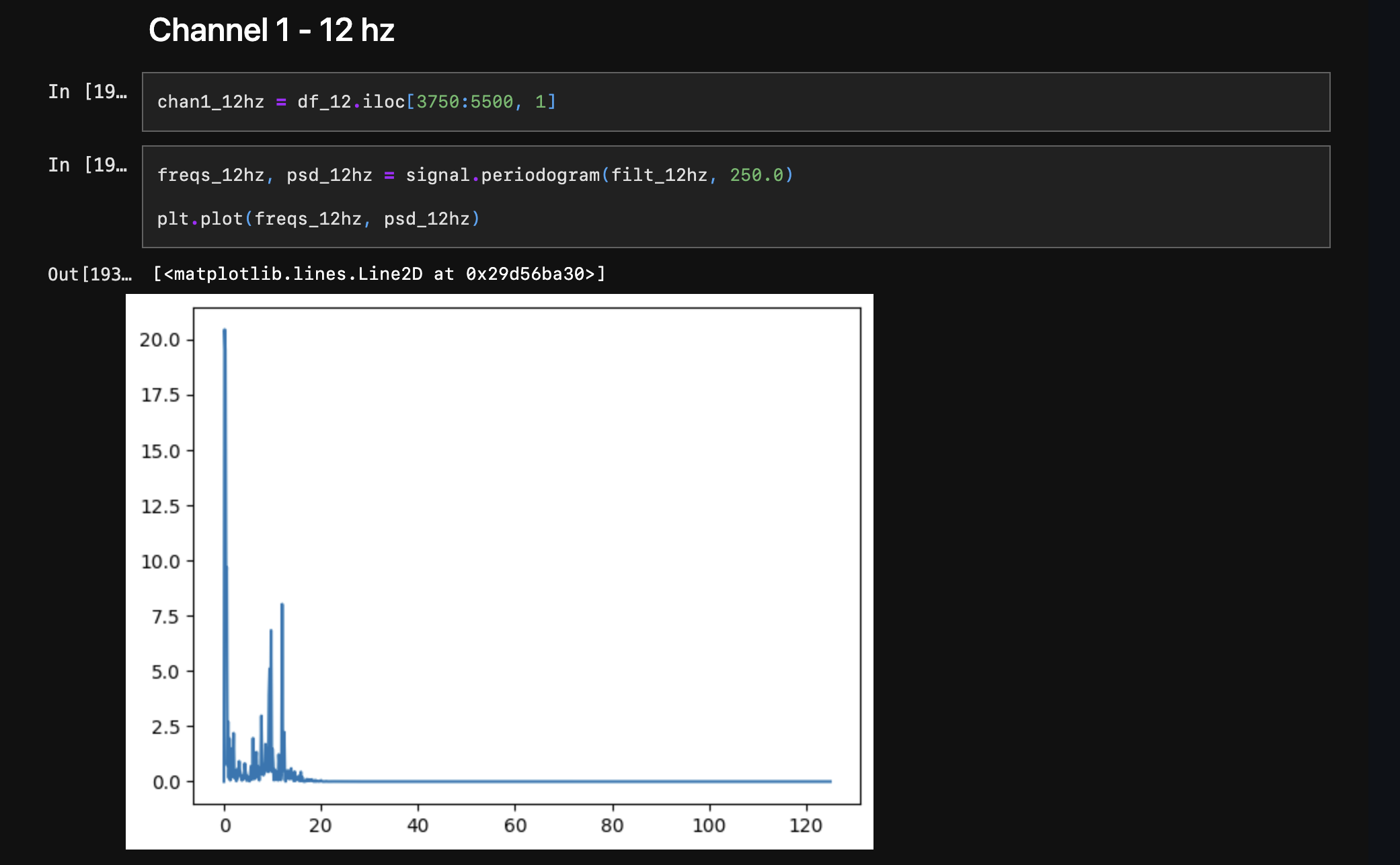

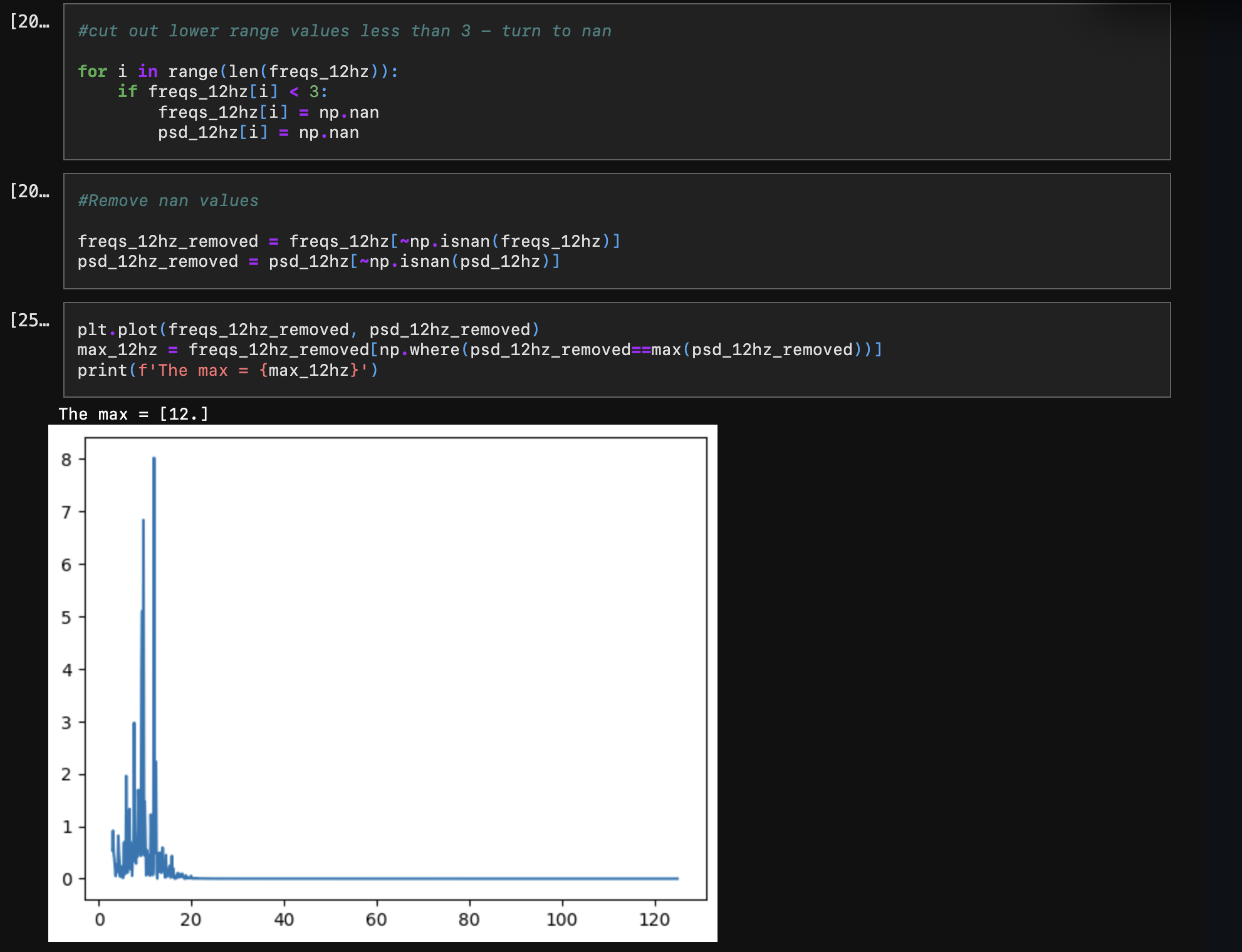

The 10, 12, and 14 Hz recordings needed an extra step to remove an unwanted low-frequency component. When we searched for the maximum peak, it appeared at 0.14 Hz instead of near the stimulus frequency. We looped through the frequency bins and removed every bin below 3 Hz, along with its corresponding power spectral density value, which gave a more accurate reading. For the 12 Hz recording, the maximum peak landed exactly at 12 Hz.

Power spectral density before removing the unwanted low-frequency signal (unwanted maximum peak at 0.14 Hz)

Power spectral density after removal (maximum peak at 12 Hz)
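The low-frequency removal described above can be written with a boolean mask instead of an explicit loop. The result is the same: every bin below 3 Hz is dropped, together with its PSD value, before the maximum is taken. `peak_above` is a hypothetical helper, and the toy values in the demo only mimic the 0.14 Hz anomaly.

```python
import numpy as np


def peak_above(freqs, psd, min_hz=3.0):
    """Return the frequency of the largest PSD value at or above min_hz."""
    freqs = np.asarray(freqs, dtype=float)
    psd = np.asarray(psd, dtype=float)
    keep = freqs >= min_hz  # boolean mask over frequency bins
    if not keep.any():
        raise ValueError("no frequency bins at or above min_hz")
    return freqs[keep][np.argmax(psd[keep])]


if __name__ == "__main__":
    freqs = np.array([0.14, 1.0, 5.0, 12.0, 14.0])
    psd = np.array([100.0, 50.0, 2.0, 10.0, 3.0])  # toy values
    # Unmasked maximum versus masked maximum.
    print(freqs[np.argmax(psd)], peak_above(freqs, psd))
```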

For the 8, 10, and 12 Hz signals, we obtained accurate readings. The 14 Hz signal was somewhat off.

Results

Created visual stimuli that flash at 8, 10, 12, and 14 Hz using Pygame

Flicker timing may not be stable because of dropped frames

We were able to process SSVEP signals from EEG data in real time (this took many trials and a lot of time)

However, real-time processing was not very stable

We could translate the SSVEP signals into a sequence of automated character movements

The character in Maplestory consistently and repeatedly performed the sequence of automated movements

When finding maximum peaks, the 14 Hz signal was not accurately recorded or filtered.

Possible causes include the monitor refresh rate, external interference (the laptop was charging), or too little or too much gel.

Improvements

Implement blink detection to further automate the training process
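A simple starting point for blink detection is to count threshold-crossing peaks on the blink channel (channel 04 in our recordings) with `scipy.signal.find_peaks`. The threshold, the 0.2 s minimum gap between blinks, and the `count_blinks` helper are hypothetical and would need per-user calibration.

```python
import numpy as np
from scipy.signal import find_peaks

FS = 250.0  # sampling rate (Hz)


def count_blinks(x, threshold_uv, fs=FS, min_gap_s=0.2):
    """Count blink-like spikes in the blink channel.

    A blink is taken to be a local peak above threshold_uv. Among peaks
    closer together than min_gap_s, only the tallest is kept.
    """
    peaks, _ = find_peaks(np.asarray(x, dtype=float),
                          height=threshold_uv,
                          distance=max(1, int(min_gap_s * fs)))
    return len(peaks)


if __name__ == "__main__":
    x = np.zeros(1000)
    x[[100, 300, 500]] = 150.0  # three synthetic blink spikes
    print(count_blinks(x, threshold_uv=100.0))
```

Counts of 2 or 3 within a short window could then trigger the double- and triple-blink commands described under How it works.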

With our system, a player would only need to control their eyes to interact with and play the game (Maplestory). This could allow people with quadriplegia to enjoy PC games.
